From Good to Great: The Advantages of Upscaling from Tier-2 to Tier-0 for Research

Costly and not scalable labelling in machine learning

Machine learning [1] covers a wide range of methods for computers to learn how to do things without being explicitly programmed: it teaches a computer to learn and improve from experience, just like humans do. Supervised learning is a type of machine learning where the computer is trained using labelled data. For example, the data might consist of pictures of animals with labels indicating which animal is in the image. The computer uses this labelled data to learn how to identify each animal on its own. Once the computer has been trained using the labelled data, it can make predictions on new, unlabelled data. For example, if you give the computer a picture of an animal it hasn't seen before, it can use what it learned from the labelled data to predict what animal it might be.

This type of machine learning gives excellent results in many applications, but the labelling is costly and not scalable.

Improving AI algorithms with supercomputing

The Processing Speech and Images (PSI) Group at KU Leuven performs demand-driven research in image and audio processing. Through his research, Tim Lebailly, PhD student at PSI, aims to improve current machine algorithms for AI.

In the new branch of research called self-supervised learning, the computer is trained to learn from its own data without needing explicit and costly labels or supervision. However, to compensate for the lack of labels, the model must be trained with even larger quantities of data. This is where supercomputing comes into play.

Tim Lebailly (PSI, KU Leuven): ‘When using supercomputing, the processing of huge image datasets can be parallelised on many GPUs, which is crucial to reduce the runtime to reasonable values, e.g., days. A laptop cannot even store the model and its gradients in memory. Even if that were the case, training our model on a laptop CPU would take many years. One run of our method will process about 3 billion images throughout the whole training.’

Scaling up from Tier-2 to Tier-1 supercomputer Hortense

Tim used the Tier-2 infrastructure of VSC to conduct his research in the past, but more computational resources were required, so he applied for compute time on the Tier-1 supercomputer Hortense of VSC.

Tim Lebailly (PSI, KU Leuven): “Since this field of self-supervised learning is so compute-intensive, our main competitors are not from academia but rather from large companies like Google, Facebook and Microsoft, which have access to massive compute resources. Supercomputers like Hortense in Ghent are crucial tools to allow researchers from academia to work in this highly competitive field.”

According to Tim, moving up from Tier-2 to Hortense was very straightforward: “It’s very similar hardware. Only the scheduler is different, but that’s a detail.”

Taking research to the next level with LUMI

Tim had to conduct numerous experiments in parallel. If he attempted this while using Tier-1, he used the entire GPU partition exclusively, causing some jobs to remain in the queue for a long time. The next logical step for Tim was to apply for compute time on LUMI [2], the fastest Tier-0 supercomputer in Europe.

Tim Lebailly (PSI, KU Leuven): “LUMI is great for Belgium as it allows users to get large amounts of compute. For example, during the pilot phase on LUMI, I ran an experiment over four days, equal to a bit more than my full allocation on Hortense for eight months! This allowed me to scale up my research to state-of-the-art neural networks.”

Since Belgium is part of the LUMI consortium, Belgian researchers could apply to participate in pilot phases before LUMI was fully operational. Tim participated in the second pilot phase of LUMI. This phase aimed to test the GPU partition's scalability and generate workloads on the GPUs [3].

Tim Lebailly (PSI, KU Leuven): “Thanks to the scale of LUMI, I only utilise a small fraction of the supercomputer's capacity, enabling me to schedule all my jobs simultaneously. In that regard, the user experience is really nice.”

Smoothly moving from Tier-1 to Tier-0

However, Tim also faced some hurdles using LUMI: “The supercomputer uses AMD GPUs instead of the more frequently used NVIDIA GPUs. Since this is so new, documentation resources online are limited. So, getting my machine learning workloads running on the AMD GPUs took some persistence. Apart from that, using a Tier-0 supercomputer is quite similar to using Tier-1, as I connected to the login node and used Slurm to submit jobs, just as I did before. So, moving from Tier-1 to Tier-0 was a smooth experience.”

The potential impact of self-supervised learning in industries relying on vision data

Tim's research could be applied in many industries that rely on vision data, like scene understanding for self-driving cars or robotic surgeries.

Tech companies are particularly interested in self-supervised learning because it is an up-and-coming field for AI. Natural language processing (NLP) has seen breakthroughs in the last few years, making products like Google Translate feel more human-like. The breakthroughs are yet to come in Computer Vision, but the progress is highly promising.

Relevant papers:

- Paper ‘Global-Local Self-Distillation for Visual Representation Learning.’

Computational resources and services used in this work were provided by the VSC (Tier-1 Supercomputer Hortence Flemish Supercomputer Center)

This paper was accepted to the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV).

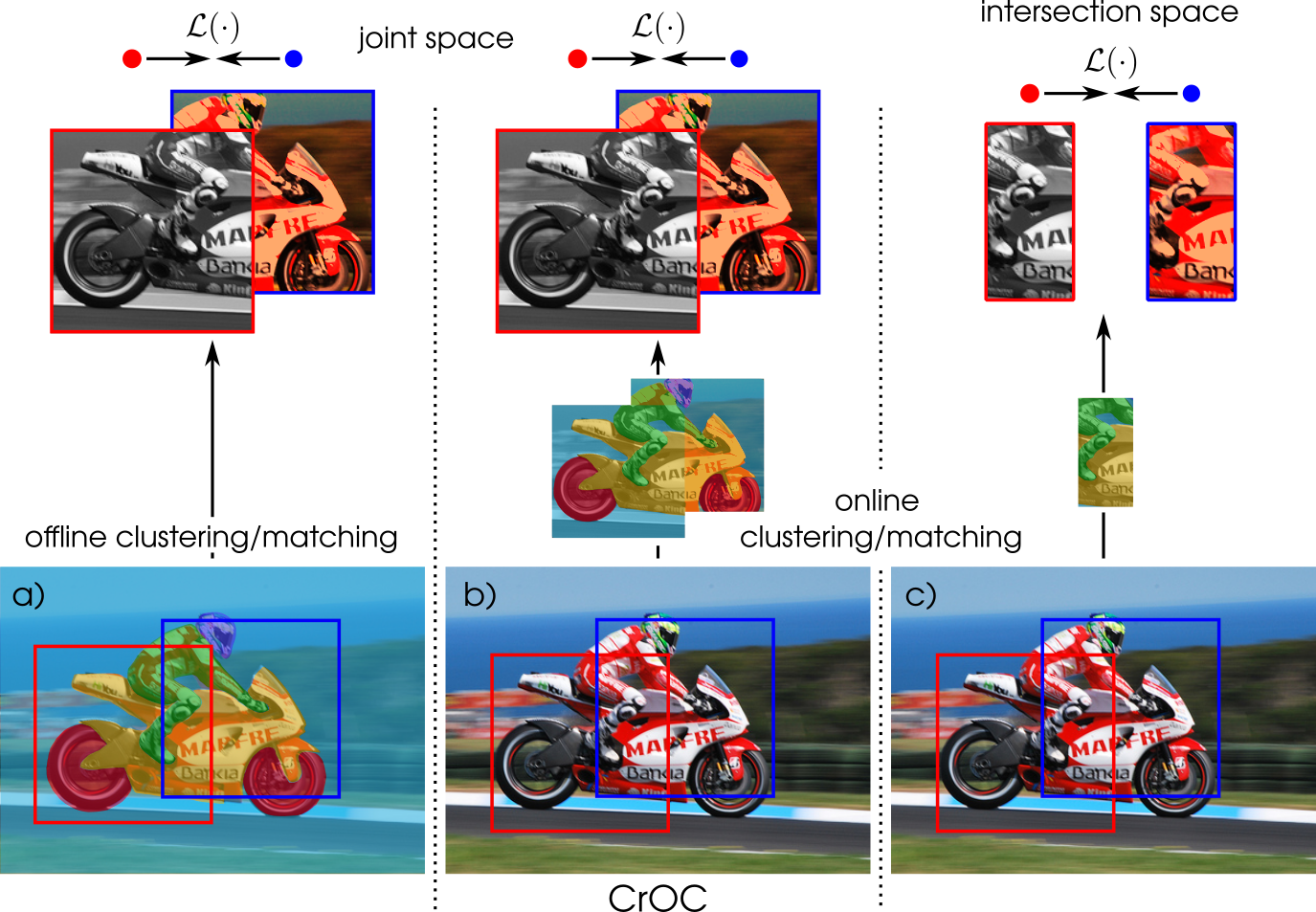

- Paper ‘Cross-View Online Clustering for Dense Visual Representation Learning.’

Research facilitated by the LUMI supercomputer, owned by the EuroHPC Joint Undertaking, hosted by CSC (Finland) and the LUMI consortium. This paper was accepted for the "Conference on Computer Vision and Pattern Recognition (CVPR)", one of the best conferences in machine learning/computer vision.

- Paper ‘Adaptive Similarity Bootstrapping for Self-Distillation.’

Research facilitated by the LUMI supercomputer, owned by the EuroHPC Joint Undertaking, hosted by CSC (Finland) and the LUMI consortium.

Footnotes

[1] For example, imagine you want to teach a computer to recognize pictures of cats. Instead of telling the computer exactly what a cat looks like, you would give it a bunch of pictures of cats and tell it to look for common features, like pointy ears and whiskers. The computer would then use that information to identify other pictures of cats that it hasn't seen before. The more pictures of cats the computer sees, the better it gets at recognizing them. This is because the computer is learning from experience and adjusting its algorithms to get better at the task.

[2] More information: https://lumi-supercomputer.eu/

[3] LUMI News article: ‘Second Pilot phase LUMI in full swing’

Published on 24/08/2023

Tim Lebailly (PSI, KU Leuven)

Tim Lebailly obtained his master’s degree in Data Science from the Swiss Federal Institute of Technology Lausanne (EPFL) in 2021. He is currently pursuing a PhD at the PSI lab (Processing Speech and Images) of the KU Leuven) under the supervision of Prof. Tinne Tuytelaars. His research interests lie mostly at the intersection of Computer Vision and Machine learning.