EuroHPC AI & Data-Intensive Applications Access Call - Apply now

Description

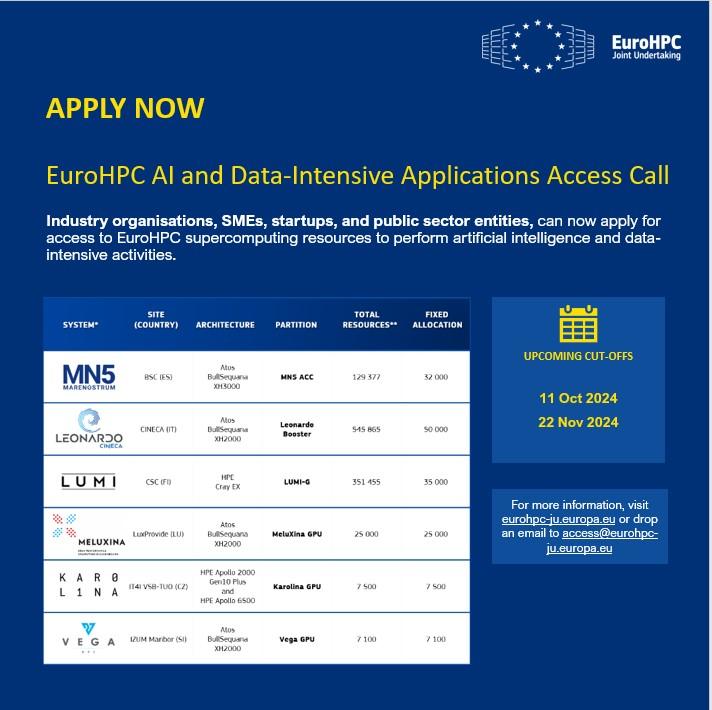

The EuroHPC JU AI and Data-Intensive Applications Access call aims to support ethical artificial intelligence, machine learning, and in general, data-intensive applications, with a particular focus on foundation models and generative AI (e.g. large language models).

The call is intended to serve industry organisations, small and medium-sized enterprises (SMEs), startups, and public sector entities that require access to supercomputing resources for artificial intelligence and data-intensive activities.

Call Deadline

The call is continuously open, with pre-defined cut-off dates. Each cut-off date triggers the evaluation of the proposals submitted up to that date.

Next Cut-Off Dates:

- 11 October 2024

- 22 November 2024

Don't miss out on this opportunity! Apply now and harness the power of supercomputing for your AI projects.

Learn more: https://eurohpc-ju.europa.eu/eurohpc-ju-access-call-ai-and-data-intensive-applications_en

Questions? Email: access[at]eurohpc-ju[dot]europa[dot]eu

Published on 30/09/2024