User Story: Making LLMs work for Dutch - Research, Challenges and the Power of HPC

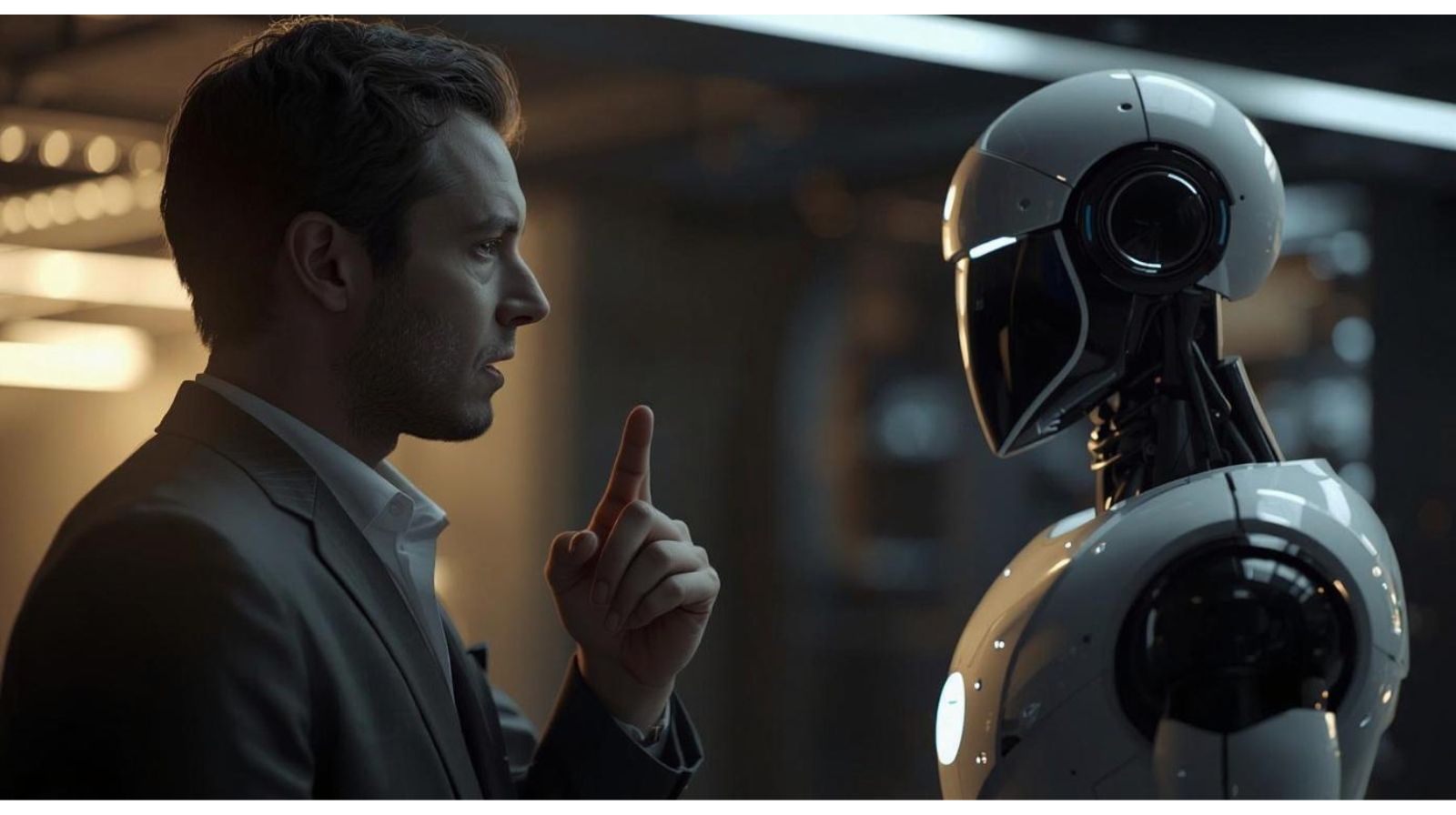

Why AI needs to speak Dutch

Generative AI is a type of artificial intelligence designed to create new content, including text, images, music, and code. Essentially, it "generates" things. For example, ChatGPT generates text while DALL-E creates images. Within this category, Large Language Models (LLMs) focus specifically on human language, and are increasingly integrated into everyday technology. Many devices, like cars and phones, now come equipped with AI assistants, and many customer service interactions involve chatbots. Additionally, companies use AI to analyse customer reviews and gain insights into consumer behaviour, while governments use LLMs to monitor the spread of information and detect hate speech.

But what happens when you try to use these systems in another language, say Dutch? LLMs often perform worse in other languages than they do in English. And that’s exactly where the LT³ Language and Translation Technology Team at Ghent University [1] comes in.

Why Language Bias in AI Matters

LLMs tend to be biased toward English or other well-resourced languages because they are trained on massive amounts of English data and much smaller quantities of data in other languages. As a result, their performance on low(er)-resource languages, such as Dutch or dialects like Limburgish or West Flemish, is often lacking. Since dedicated AI models for these languages usually don’t exist, people are left using one-size-fits-all tools that don’t serve them well.

That’s why the LT³ team focuses on improving the performance of large language models in Dutch.

Prof. Veronique Hoste, specialised in machine learning for natural language and director of the LT³ Language and Translation Technology Team, emphasises the importance of focusing on Dutch to promote inclusivity in language models. Prof. Hoste also points out that current language models are heavily English- and Anglo-centric, both linguistically and in terms of world knowledge, which often limits their usefulness for applications in languages like Dutch.

Prof. Hoste: “A recent PhD project on irony detection in Dutch showed that generative models, even multimodal ones, contain very little Dutch data compared to English. When asked to explain an ironic Dutch tweet, the models struggled, while the same task in English worked much better. This highlights why it is crucial to focus on less-resourced languages like Dutch, which, although not truly under-resourced, still lags far behind English in these systems.”

Prof. Hoste: “I believe leveraging technology like ChatGPT in various contexts can be beneficial. While it performs well in many applications, it struggles with culturally specific tasks, like question answering and recognising local names and organisations. This cultural sensitivity is crucial for effective human-machine interaction. It's important to incorporate knowledge of the Dutch language into these systems. Otherwise, users may become frustrated if the system fails to meet their needs, which would be unfortunate. Tailoring technology for Dutch could significantly improve its effectiveness.”

From Sentiment to Sensitivity: Real-World Research Applications

Prof. Hoste and her team’s research has led to a wide range of practical applications, such as automatically detecting sentiment in online reviews (i.e., determining whether a sentence expresses a positive or negative opinion). Another critical application involves understanding more fine-grained emotions, which are recognised to be culturally driven and context-dependent. The way we discuss emotions can vary significantly from one culture to another, and understanding this nuance is crucial for effective customer relationship management.

This line of research on sentiment detection also laid the foundation for Alfasent, a commercial spin-off [2].

VSC is Key to Performing Research

A Large Language Model is called large because it has many parameters (billions or even trillions). During training, the model adjusts these parameters to get better at understanding and generating language. In general, the more parameters a model has, the more capable and accurate it tends to be, but also the more memory and computing power it needs.

Prof. Els Lefever, associate professor at LT³: “Even the smaller generative models comprise at least 7,000,000,000 parameters, while recent GPT models contain as many as 1.8 trillion parameters. Just loading these models is impossible on a personal computer, let alone training them on large datasets. The HPC infrastructure is key to our experimental work on LLMs for Dutch.”
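To give a feel for these numbers, here is a back-of-the-envelope sketch of how much memory the weights alone would occupy. It assumes 2 bytes per parameter (16-bit floating point), which is an assumption and not a figure from the researchers; the function name `weight_memory_gb` is purely illustrative.

```python
# Rough memory needed just to hold model weights, ignoring activations,
# optimizer state, and other overhead.
# Assumption: 2 bytes per parameter (16-bit floating point).
BYTES_PER_PARAM_FP16 = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Return the weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


small_model_gb = weight_memory_gb(7e9)      # 7 billion parameters
large_model_gb = weight_memory_gb(1.8e12)   # 1.8 trillion parameters

print(f"7B parameters:   {small_model_gb:,.0f} GB")
print(f"1.8T parameters: {large_model_gb:,.0f} GB")
```

Under this assumption, the weights come to about 14 GB for a 7-billion-parameter model and about 3,600 GB for a 1.8-trillion-parameter model. Training typically needs several times more than that, because gradients and optimizer state have to be stored alongside the weights.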

Currently, the spin-off Alfasent focuses primarily on text, while the LT³ team is increasingly turning toward multimodality. Prof. Hoste explains that in current online communication, text is increasingly paired with images that convey additional information or subtle nuances, highlighting the need for a multimodal approach:

Prof. Hoste: “Emotions are often expressed through various modalities, which is essential to our ongoing research goals. To achieve this, we rely heavily on supercomputers as we work with textual, speech, and video data, significantly increasing both the data we handle and the processing power required.”

Prof. Lefever adds: “The computing power of the VSC is essential for conducting our research. We aim to train our models and potentially fine-tune them with new data. Still, our existing equipment, including individual laptops and servers at the lab, lacks the capacity to run these experiments effectively. To properly train, fine-tune, and evaluate large language models and other complex models, we greatly need the computational capabilities offered by the VSC.”

Training Large Language Models

To train a large language model, you start by collecting a wide variety of raw text data, like newspapers, social media posts, and other written materials. This helps the model learn the patterns, structures, and context across language use. The next step is to tailor the model for specific tasks, like detecting irony, sentiment, hate speech or disinformation. For this, a smaller set of labelled data is used, where humans identify specific characteristics, for example, whether or not a social media post can be classified as hate speech. This two-step process allows the model to first develop a broad understanding of language before specialising in specific applications.
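The difference between the two kinds of data can be sketched in a few lines. This is only an illustration: it assumes a next-word prediction objective for the first step (models such as mBERT use a masked-word variant instead), and the helper `next_word_pairs` and the example sentences are hypothetical.

```python
def next_word_pairs(text: str, context_size: int = 3):
    """Turn raw text into (context, next word) training pairs; no human labels needed."""
    words = text.split()
    return [
        (words[i - context_size:i], words[i])
        for i in range(context_size, len(words))
    ]


# Step 1: raw text supplies its own training signal (the word that comes next).
raw_text = "de kat zit op de mat"
pretraining_examples = next_word_pairs(raw_text)

# Step 2: a much smaller set where humans supplied the label (hypothetical examples).
labelled_examples = [
    ("Wat een geweldige service!", "positive"),
    ("Nooit meer, echt waardeloos.", "negative"),
]

for context, target in pretraining_examples:
    print(context, "->", target)
for text, label in labelled_examples:
    print(repr(text), "->", label)
```

The first step scales to huge text collections because no annotation is required; the second step is limited by how many examples people can label by hand.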

A Dutch-focused solution: mBERT and the distillation method

As an alternative to training a separate model for each under-resourced language, researchers rely on so-called “multilingual models”, which are trained on a large number of languages (often 100+). This benefits lower-resourced languages, since the model can internally transfer what it learns from one language to another. However, large multilingual systems are challenging to use in practice. They are huge, require a lot of computing power and memory, and are slow, which makes them unsuitable for phones, low-end computers, or regions with limited technical infrastructure. Moreover, as mentioned earlier, they tend to be biased toward English.

Researchers Pranaydeep Singh, Aaron Maladry & Loic De Langhe started from the large language model mBERT [3], which served as a teacher model and used only Dutch-language data to train a smaller model (student model) using the distillation method. This student model was evaluated on real-world language tasks such as sentiment analysis and part-of-speech tagging (labelling words as nouns, verbs, etc.).
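The article does not spell out which distillation objective the researchers used. As a minimal sketch of the general idea, one common formulation trains the student to match the teacher's "softened" output probabilities, measured with a KL divergence. The temperature value, the function names, and the example scores below are all illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between the teacher's and the student's softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical scores over three candidate words for one position in a sentence.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]   # nearly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # prefers a different word

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
print(f"close student: {loss_close:.4f}, far student: {loss_far:.4f}")
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, so minimising it over many Dutch sentences pushes the smaller model toward the teacher's behaviour.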

Pranaydeep Singh, researcher at LT³: “The results were impressive. The new Dutch-specific model performed as well as or even better than mBERT on Dutch tasks, while being three times smaller and six times faster [4]. This makes AI more accessible and useful for Dutch-speaking users, especially in resource-constrained environments.”
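The "three times smaller and six times faster" summary can be checked against the figures given in footnotes [3] and [4]. The sketch below simply divides those numbers; it assumes the reported inference figures are times (lower is faster), since the footnote does not state the unit clearly.

```python
# Figures as reported in footnotes [3] and [4] of this article.
MBERT_GPU_MB, STUDENT_GPU_MB = 10880, 2944        # GPU memory in megabytes
MBERT_PARAMS_M, STUDENT_PARAMS_M = 167, 66        # parameters in millions
MBERT_SPEED, STUDENT_SPEED = 0.384, 0.066         # assumed to be time, so lower is faster

memory_ratio = MBERT_GPU_MB / STUDENT_GPU_MB
param_ratio = MBERT_PARAMS_M / STUDENT_PARAMS_M
speed_ratio = MBERT_SPEED / STUDENT_SPEED

print(f"GPU memory: {memory_ratio:.1f}x smaller")
print(f"Parameters: {param_ratio:.1f}x fewer")
print(f"Inference:  {speed_ratio:.1f}x faster")
```

The ratios come out at roughly 3.7 for GPU memory, 2.5 for parameter count, and 5.8 for inference, which is consistent with the rounded figures in the quote.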

If you are wondering why the model only shrank by a factor of three rather than 100, Prof. Lefever explains: “Most of the knowledge in these large models isn’t neatly divided by language. Instead, the model learns general patterns about how words relate to each other, like figuring out that “apple,” “pear,” and “cherry” are related, without ever really “understanding” what they are. This knowledge is shared across languages. Because of this cross-lingual learning, you cannot simply discard 99% of the model and retain only the Dutch part. The Dutch language actually benefits from the shared patterns learned from larger languages like English, French, or German. If you cut it down too much, you lose that general knowledge, and the model would stop working well. That’s why the distilled Dutch model is only about three times smaller, not 100 times smaller.”

Inclusivity and dialects: The next frontier

Prof. Hoste: “In Flanders, we are enhancing automatic speech recognition (ASR) systems to better understand Flemish ‘tussentaal’ and dialects. Our team has also developed syntactic analysis systems to address unique dialect features, such as repetition in phrases like ‘khebbekik.’ With fine-tuning, our systems are proving effective in navigating these complexities, which is essential for making language and speech technology more linguistically and culturally sensitive.”

Prof. Lefever: “It is furthermore crucial to acknowledge the absence of benchmarks for assessing multimodal large language models, particularly regarding the capabilities of multilingual and Dutch language models in relation to Flemish.”

Prof. Hoste: “In NLP, it has indeed been shown that ‘Not all languages are created equally.’ We therefore recently submitted a proposal to develop a comprehensive Flemish benchmark dataset through the Flanders AI program. This dataset will represent the language as written and spoken across different regions of Flanders, enabling us to gain a reliable understanding of how well LLMs handle question answering or entity detection on genuine Flemish data.”

Ultimately, this work strives to make AI not just understand and speak Dutch, but also understand and speak like the people who actually live here.

Making AI More Human and More Local

Prof. Hoste concludes: “If we aim to develop models that focus on emotion generation or empathy in human-machine interaction, then we need to ensure these models are fine-tuned, for instance, on Dutch. This way, they can express empathy in a culturally relevant manner. It's vital to incorporate these cultural nuances into system design.”

In a world where AI is becoming increasingly embedded in daily life, it’s critical that these systems reflect the diversity of language and culture. Thanks to the work of researchers like Prof. Hoste and her team, and the support of Belgium’s computing infrastructure, AI is learning to speak Dutch, including dialects.

Published on 13/10/2025

[1] Language and Translation Technology Team - Ghent University

[2] SENTEMO Project (January 2021- December 2022) "Multilingual aspect-based sentiment and emotion analysis"

[3] mBERT takes up around 10880 Megabytes of GPU memory while the student model takes up around 2944 Megabytes.

[4] mBERT has around 167 million parameters and an inference speed of 0.384 per second (with a batch size of 32 and sequence length of 512), while under the same settings the models created by Pranaydeep Singh and his fellow researchers have 66 million parameters and an inference speed of 0.066 per second.

Prof. Veronique Hoste

Veronique Hoste is Senior Full Professor of Computational Linguistics at the Faculty of Arts and Philosophy at Ghent University. She is head of the Department of Translation, Interpreting and Communication and director of the LT3 language and translation technology team at the same department. She holds a PhD in computational linguistics from the University of Antwerp (Belgium) on "Optimization issues in machine learning of coreference resolution" (2005).

Prof. Els Lefever

Els Lefever is an associate professor at the LT3 language and translation technology team at Ghent University. She has strong expertise in machine learning of natural language and multilingual NLP, with a special interest in computational semantics, irony and hate speech detection, argumentation mining, complex reasoning, and ancient language processing.

Pranaydeep Singh

Pranaydeep is a postdoctoral researcher working on Mechanistic Interpretability for Multilingual Models. He has previously worked on Cross-Lingual and Low-Resource NLP, Interpretability, and Transfer Learning. In the past, he has also worked on Multi-modal analysis of memes, Aspect-based Sentiment Analysis and Large Scale Document Layout Understanding.

Aaron Maladry

Aaron Maladry is a postdoctoral researcher at the LT3 research group with a background in Slavic languages and a PhD in Computational Linguistics. In his recent work, Aaron investigated how language models detect and interpret irony, paying particular attention to the role of world knowledge in explaining figurative language.

Loic De Langhe

Loic De Langhe is a postdoctoral researcher in the LT3 research group at Ghent University. He holds degrees in Computational Linguistics and Artificial Intelligence (AI). His research interests include computational approaches to discourse and syntax, as well as Information Retrieval (IR).